Overview

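Building on the 3DGS radiance-field method, the paper proposes a new framework for point-cloud distribution and optimization strategy. It trains in single-digit minutes while keeping visual quality close to that of 3DGS, striking a trade-off between render quality and optimization efficiency.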

Gaussian importance

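Compressing Volumetric Radiance Fields to 1 MB shows that most voxels have minimal impact on the rendered result. This indicates substantial redundancy in the grid model, which can be pruned without degrading render quality.

We define Gaussian importance as the opacity of the Gaussians that rank in the top 1% by accumulated opacity within a view, because these Gaussians have the largest effect on the rendered result.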

aggressive Gaussian densification

depth reinitialization

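For each ray, find the ellipsoid with the largest opacity contribution (blending weight $w_i$), intersect the ray with that ellipsoid, and take the midpoint of the two intersection points as the depth point.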

$$d(x) = d^{\text{mid}}_{i_{\max}}(x), \quad \text{where } i_{\max} = \arg\max_{i} w_i$$
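The selection rule can be sketched for a single ray as follows. This is a minimal illustration in plain Python, not the paper's CUDA kernel: the function name `depth_point_for_ray` and the input layout (front-to-back lists of per-Gaussian alpha values and midpoint depths) are assumptions made for the example.

```python
def depth_point_for_ray(alphas, mid_depths):
    """Return (i_max, depth) for one ray.

    alphas:     per-Gaussian alpha values along the ray, sorted front to back
    mid_depths: midpoint depth of each Gaussian's ray-ellipsoid intersection
    Both inputs are hypothetical stand-ins for what the rasterizer computes.
    """
    transmittance = 1.0
    best_weight, i_max = -1.0, None
    for i, alpha in enumerate(alphas):
        weight = alpha * transmittance      # blending weight w_i = alpha_i * T_i
        if weight > best_weight:
            best_weight, i_max = weight, i
        transmittance *= (1.0 - alpha)      # light remaining after Gaussian i
    depth = None if i_max is None else mid_depths[i_max]
    return i_max, depth


# The second Gaussian wins: its weight 0.8 * 0.9 is larger than the others.
i_max, depth = depth_point_for_ray([0.1, 0.8, 0.9], [1.0, 2.5, 4.0])
```

Note that the most opaque Gaussian (alpha 0.9) does not win here, because the Gaussians in front of it have already absorbed most of the light.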

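A depth map is created, and the point cloud is reconstructed and merged with an approach similar to screen-space post-processing. This is depth reinitialization, and it speeds up the early optimization iterations.

However, this simple point-cloud optimization generalizes poorly across scenes, lets the number of points explode, and degrades image quality.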

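critical Gaussian identification

In the early optimization iterations almost every Gaussian is still in an under-reconstruction state. Cloning indiscriminately effectively dilutes Gaussian importance and makes the Gaussian count hard to control, so only the Gaussians that represent the object surface are selected. This is critical Gaussian identification.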

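Again, find the ellipsoid $G_{i_{\max}}$ with the largest opacity contribution in the region.

The critical Gaussians are then obtained with the inverse distribution function, where $\beta_p$ is the chosen quantile level, $\theta_p$ is the resulting importance threshold, and the candidates are the $G_{i_{\max}}$:

$$\theta_p = F^{-1}(\beta_p)$$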

aggressive Gaussian clone

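Using the existing Gaussians directly as the point-cloud structure hampers early optimization, so the method borrows from 3D Gaussian Splatting as Markov Chain Monte Carlo to densify smoothly. The clone step is applied to every critical Gaussian, the computation of the Gaussian center is simplified, and the number of clones is set to 2: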

$$P_{\text{new}} = P_{\text{old}}$$

$$\alpha_{\text{new}} = 1 - \sqrt{1 - \alpha_{\text{old}}}$$

$$\Sigma_{\text{new}} = (\alpha_{\text{old}})^2 \cdot \left( 2\alpha_{\text{new}} - \frac{(\alpha_{\text{new}})^2}{\sqrt{2}} \right)^{-2} \cdot \Sigma_{\text{old}}$$
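A quick numerical check helps explain the opacity rule. Compositing two identical copies with opacity $\alpha_{\text{new}}$ gives $1 - (1 - \alpha_{\text{new}})^2 = \alpha_{\text{old}}$, so at the center the pair blocks the same amount of light as the original Gaussian. The sketch below verifies this and evaluates the per-axis scale factor implied by the covariance formula. The function name `clone_params` is an assumption for illustration, and scalar floats stand in for tensors.

```python
import math


def clone_params(alpha_old, scale_old):
    """Opacity and per-axis scale for each of the two clones.

    The scale factor is the square root of the covariance factor,
    because the covariance grows with the square of the scale.
    """
    alpha_new = 1.0 - math.sqrt(1.0 - alpha_old)
    denom = 2.0 * alpha_new - alpha_new ** 2 / math.sqrt(2.0)
    scale_new = alpha_old / denom * scale_old
    return alpha_new, scale_new


alpha_new, scale_new = clone_params(alpha_old=0.75, scale_old=1.0)
# Two clones composited at the same center recover the original opacity.
combined = 1.0 - (1.0 - alpha_new) ** 2
```

With `alpha_old = 0.75` each clone gets opacity 0.5, the composited pair gives 0.75 again, and each clone is slightly smaller than the original.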

Overall Aggressive Densification Pipeline

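The original progressive densification of 3DGS is kept. Starting at iteration 500, critical Gaussian identification and aggressive Gaussian clone run every 250 iterations, and depth reinitialization is performed at iteration 2K. This shortens the densification phase to 3K iterations and the overall schedule from 30K to 18K iterations.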
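The schedule can be written down as a small lookup. This is only a sketch of the numbers quoted in these notes: the function name, the parameter names, and the assumptions that aggressive cloning stops when densification ends at 3K and that depth reinitialization fires exactly once are mine, not taken from the released code.

```python
def scheduled_steps(iteration,
                    aggressive_start=500, aggressive_interval=250,
                    depth_reinit_iter=2_000, densify_until=3_000):
    """Return the names of the extra densification steps due at `iteration`."""
    steps = []
    in_window = aggressive_start <= iteration < densify_until
    if in_window and (iteration - aggressive_start) % aggressive_interval == 0:
        steps.append("critical_identification_and_clone")
    if iteration == depth_reinit_iter:
        steps.append("depth_reinit")
    return steps


for it in (500, 600, 2_000, 3_000):
    print(it, scheduled_steps(it))
```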

visibility Gaussian culling

For the $k$-th training view, Gaussian $G_i$ contributes a blending weight $w_{ij}^{k}$ to the $j$-th ray, and its per-view importance is $I_i^k$.

It is computed as:

$$I_i^k = \sum_{j=1}^{J} w_{ij}^{k}$$

where $J$ is the number of rays that intersect $G_i$.

The visibility mask $V_i^k$ is obtained by applying the indicator function $\mathcal{I}$ to $I_i^k$ with a threshold $\tau$: if $I_i^k$ exceeds $\tau$ then $V_i^k = 1$, otherwise $V_i^k = 0$.

$$V_i^k = \mathcal{I}(I_i^k > \tau)$$

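This ensures that only Gaussians in the top 1% by Gaussian importance are treated as visible.

Between iterations 500 and 13K, a visibility mask is precomputed for each training view so that unnecessary Gaussians are culled, which reduces computation and speeds up training.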
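The two formulas for $I_i^k$ and $V_i^k$ amount to a per-view sum followed by a threshold. A minimal sketch for one view, assuming the blending weights are already grouped per Gaussian (the function name and the nested-list layout are hypothetical; the real implementation accumulates these sums inside the rasterizer):

```python
def visibility_mask(weights_per_gaussian, tau):
    """weights_per_gaussian[i] lists the weights w_ij^k of Gaussian i in view k."""
    importance = [sum(w) for w in weights_per_gaussian]    # I_i^k
    mask = [1 if imp > tau else 0 for imp in importance]   # V_i^k
    return importance, mask


# Three Gaussians: one clearly visible, one barely contributing, one never hit.
importance, mask = visibility_mask([[0.4, 0.3], [0.01], []], tau=0.05)
```

Only the first Gaussian passes the threshold, so it is the only one that would be rasterized for this view.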

Implementation

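To reduce memory pressure, optimization of the spherical-harmonics coefficients is disabled during densification, and training runs at a lower resolution.

Code implementation details

critical Gaussian identification

This is the key point of the whole paper. A quantile function is used with a very large quantile, 0.99: Gaussians are sorted by importance, and the low-importance tail that together accounts for less than 1% of the total importance is filtered out. Because the importance passed in differs from call to call, this works very well and prunes many unnecessary Gaussians.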

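```python
import torch


# Inverse distribution function: threshold on the cumulative importance.
def init_cdf_mask(importance, thres=1.0):
    importance = importance.flatten()
    if thres != 1.0:
        percent_sum = thres
        # Sort ascending; the small offset avoids an all-zero sum.
        vals, idx = torch.sort(importance + (1e-6))
        cumsum_val = torch.cumsum(vals, dim=0)
        # First sorted position whose cumulative share exceeds 1 - thres.
        split_index = ((cumsum_val / vals.sum()) > (1 - percent_sum)).nonzero().min()
        split_val_nonprune = vals[split_index]

        # Keep everything strictly above the split value.
        non_prune_mask = importance > split_val_nonprune
    else:
        non_prune_mask = torch.ones_like(importance).bool()

    return non_prune_mask
```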
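To see what the mask keeps, here is a plain-Python mirror of the same logic on a tiny input. It uses lists instead of tensors so it runs without torch. The name `cdf_mask` is hypothetical, and the sketch follows the function above step by step rather than the paper's text.

```python
def cdf_mask(importance, thres=0.99):
    """Plain-Python mirror of the cumulative-importance threshold."""
    if thres == 1.0:
        return [True] * len(importance)
    vals = sorted(v + 1e-6 for v in importance)   # ascending, with the same offset
    total = sum(vals)
    running = 0.0
    split_val = vals[-1]
    for v in vals:
        running += v
        if running / total > 1.0 - thres:         # first value past the low tail
            split_val = v
            break
    # Strict comparison against the offset value, as in the original.
    return [v > split_val for v in importance]


mask = cdf_mask([0.0, 0.001, 0.002, 1.0, 2.0, 3.0], thres=0.99)
```

The three tiny entries are dropped because together they hold far less than 1% of the total. The entry `1.0` is also dropped: it is the value at the split point, and the strict comparison against the offset value excludes it. With a realistic number of Gaussians this boundary effect touches only the Gaussians tied at that single value.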

depth reinitialization

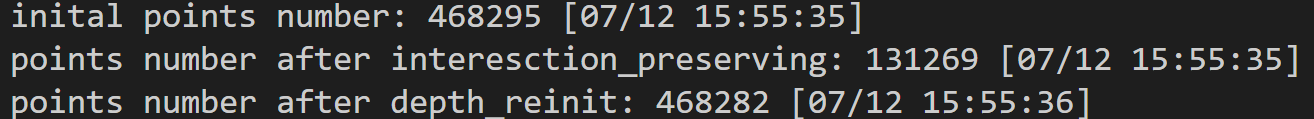

```python
if iteration == args.depth_reinit_iter:
    # Target number of depth points, relative to the current Gaussian count.
    num_depth = gaussians._xyz.shape[0] * args.num_depth_factor

    # Prune by importance, then sample new points from the rendered depth.
    gaussians.interesction_preserving(scene, render_simp, iteration, args, pipe, background)
    pts, rgb = gaussians.depth_reinit(scene, render_depth, iteration, num_depth, args, pipe, background)

    # Replace the Gaussians with ones created from the sampled points.
    gaussians.reinitial_pts(pts, rgb)

    # Reset the optimizer state and the per-view culling masks.
    gaussians.training_setup(opt)
    gaussians.init_culling(len(scene.getTrainCameras()))
    mask_blur = torch.zeros(gaussians._xyz.shape[0], device='cuda')
    torch.cuda.empty_cache()
```

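First, find the ellipsoid with the largest opacity contribution.

This yields the accum_weights, area_proj, and area_max variables. As the code shows, a simple rasterization pass is performed here.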

```python
def render_simp(viewpoint_camera, pc: GaussianModel, pipe, bg_color: torch.Tensor,
                scaling_modifier=1.0, override_color=None, culling=None):
    '''
    ...
    '''
    rendered_image, radii, \
    accum_weights_ptr, accum_weights_count, accum_max_count = rasterizer.render_simp(
        means3D=means3D,
        means2D=means2D,
        dc=dc,
        shs=shs,
        culling=culling,
        colors_precomp=colors_precomp,
        opacities=opacity,
        scales=scales,
        rotations=rotations,
        cov3D_precomp=cov3D_precomp)

    return {"render": rendered_image,
            "viewspace_points": screenspace_points,
            "visibility_filter": (radii > 0).nonzero(),
            "radii": radii,
            # Sum of blending weights per Gaussian in this view.
            "accum_weights": accum_weights_ptr,
            # Number of pixels each Gaussian contributed to.
            "area_proj": accum_weights_count,
            # Number of pixels where the Gaussian had the largest weight.
            "area_max": accum_max_count,
            }
```

```cuda
template <uint32_t CHANNELS>
__global__ void __launch_bounds__(BLOCK_X * BLOCK_Y)
render_simpCUDA(
    const uint2* __restrict__ ranges,
    const uint32_t* __restrict__ point_list,
    int W, int H,
    const float2* __restrict__ points_xy_image,
    const float* __restrict__ features,
    float* __restrict__ accum_weights_p,
    int* __restrict__ accum_weights_count,
    float* __restrict__ accum_max_count,
    const float4* __restrict__ conic_opacity,
    float* __restrict__ final_T,
    uint32_t* __restrict__ n_contrib,
    const float* __restrict__ bg_color,
    float* __restrict__ out_color)
{
    // Identify the current tile and the pixel handled by this thread.
    auto block = cg::this_thread_block();
    uint32_t horizontal_blocks = (W + BLOCK_X - 1) / BLOCK_X;
    uint2 pix_min = { block.group_index().x * BLOCK_X, block.group_index().y * BLOCK_Y };
    uint2 pix_max = { min(pix_min.x + BLOCK_X, W), min(pix_min.y + BLOCK_Y, H) };
    uint2 pix = { pix_min.x + block.thread_index().x, pix_min.y + block.thread_index().y };
    uint32_t pix_id = W * pix.y + pix.x;
    float2 pixf = { (float)pix.x, (float)pix.y };

    // (Not shown in this excerpt: the shared-memory buffers, the
    //  initialisation of T, C, weight_max, idx_max and flag_update, and the
    //  header of the outer batch loop closed by the second brace below.)

        for (int j = 0; !done && j < min(BLOCK_SIZE, toDo); j++)
        {
            contributor++;

            // Evaluate the 2D Gaussian at this pixel.
            float2 xy = collected_xy[j];
            float2 d = { xy.x - pixf.x, xy.y - pixf.y };
            float4 con_o = collected_conic_opacity[j];
            float power = -0.5f * (con_o.x * d.x * d.x + con_o.z * d.y * d.y) - con_o.y * d.x * d.y;
            if (power > 0.0f)
                continue;

            float alpha = min(0.99f, con_o.w * exp(power));
            if (alpha < 1.0f / 255.0f)
                continue;
            float test_T = T * (1 - alpha);
            if (test_T < 0.0001f)
            {
                done = true;
                continue;
            }

            for (int ch = 0; ch < CHANNELS; ch++)
                C[ch] += features[collected_id[j] * CHANNELS + ch] * alpha * T;

            // Track the Gaussian with the largest blending weight on this ray.
            if (weight_max < alpha * T)
            {
                weight_max = alpha * T;
                idx_max = collected_id[j];
                flag_update = 1;
            }

            // Accumulate the blending weight and the pixel count per Gaussian.
            atomicAdd(&(accum_weights_p[collected_id[j]]), alpha * T);
            atomicAdd(&(accum_weights_count[collected_id[j]]), 1);

            T = test_T;
            last_contributor = contributor;
        }
    }

    // Count one "max" pixel for the Gaussian that dominated this ray.
    if (flag_update == 1)
    {
        atomicAdd(&(accum_max_count[idx_max]), 1);
    }
}
```

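First, interesction_preserving filters the Gaussians. Every training view is rasterized, which yields each Gaussian's contribution to those views. Here I call the Gaussians that make the largest contribution on at least one ray "effective Gaussians". The rest are completely covered by higher-weight Gaussians, have little effect on rendering, and can be discarded. The importance computation differs for outdoor and indoor scenes: the outdoor metric is weighted and accumulates the opacity contribution per unit of projected area. My guess at the reason is that outdoor scenes contain more empty space and sparser points, so the Gaussians are larger and more easily affected by their projected area.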

```python
def interesction_preserving(self, scene, render_simp, iteration, args, pipe, background):
    imp_score = torch.zeros(self._xyz.shape[0]).cuda()
    accum_area_max = torch.zeros(self._xyz.shape[0]).cuda()
    views = scene.getTrainCameras_warn_up(iteration, args.warn_until_iter, scale=1.0, scale2=2.0).copy()
    for view in views:
        render_pkg = render_simp(view, self, pipe, background, culling=self._culling[:, view.uid])
        accum_weights = render_pkg["accum_weights"]
        area_proj = render_pkg["area_proj"]
        area_max = render_pkg["area_max"]

        accum_area_max = accum_area_max + area_max

        if args.imp_metric == 'outdoor':
            # Weight per projected pixel, only where the Gaussian won a ray.
            mask_t = area_max != 0
            temp = imp_score + accum_weights / area_proj
            imp_score[mask_t] = temp[mask_t]
        else:
            imp_score = imp_score + accum_weights

    # Gaussians that never had the largest weight on any ray get zero importance.
    imp_score[accum_area_max == 0] = 0
    non_prune_mask = init_cdf_mask(importance=imp_score, thres=0.99)

    self.prune_points(non_prune_mask == False)

    return self._xyz, SH2RGB(self._features_dc + 0)[:, 0]
```
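The difference between the two metrics is easier to see on a toy input. The sketch below re-implements only the accumulation step with plain lists. The function name and the per-view dictionaries are hypothetical stand-ins for the `render_simp` outputs, and the numbers are made up.

```python
def accumulate_importance(views, num_gaussians, metric="indoor"):
    """views: dicts with per-Gaussian lists 'accum_weights', 'area_proj', 'area_max'."""
    imp_score = [0.0] * num_gaussians
    accum_area_max = [0] * num_gaussians
    for view in views:
        for i in range(num_gaussians):
            accum_area_max[i] += view["area_max"][i]
            if metric == "outdoor":
                # Update only Gaussians that won at least one pixel in this
                # view, and normalise by the pixels they touched.
                if view["area_max"][i] != 0:
                    imp_score[i] += view["accum_weights"][i] / view["area_proj"][i]
            else:
                imp_score[i] += view["accum_weights"][i]
    # Zero out Gaussians that never had the largest weight on any ray.
    return [s if m != 0 else 0.0 for s, m in zip(imp_score, accum_area_max)]


views = [
    {"accum_weights": [4.0, 1.0, 0.5], "area_proj": [8, 2, 5], "area_max": [3, 1, 0]},
    {"accum_weights": [2.0, 0.0, 0.5], "area_proj": [4, 0, 5], "area_max": [2, 0, 0]},
]
indoor = accumulate_importance(views, 3, metric="indoor")
outdoor = accumulate_importance(views, 3, metric="outdoor")
```

The third Gaussian contributes in both views but never dominates a ray, so both metrics assign it zero. The first Gaussian is six times as important as the second under the indoor metric but only twice as important under the outdoor one, because its large footprint is divided out.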

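Next comes depth_reinit. The depth kernel produces accum_alpha, which stores the final transmittance T of each pixel (it is not a depth; 1 - accum_alpha is later used as the sampling weight), and out_pts, which stores the depth point itself: the ray origin plus the normalized ray direction times the distance to the midpoint of the ray-ellipsoid intersection.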

```cuda
template <uint32_t CHANNELS>
__global__ void __launch_bounds__(BLOCK_X * BLOCK_Y)
render_depthCUDA(
    const uint2* __restrict__ ranges,
    const uint32_t* __restrict__ point_list,
    int W, int H,
    const float2* __restrict__ points_xy_image,
    const float* __restrict__ features,
    const float4* __restrict__ conic_opacity,
    float* __restrict__ final_T,
    uint32_t* __restrict__ n_contrib,
    const float* __restrict__ bg_color,
    float* __restrict__ out_color,
    float* __restrict__ out_pts,
    float* __restrict__ out_depth,
    float* accum_alpha,
    int* __restrict__ gidx,
    float* __restrict__ discriminants,
    const float* __restrict__ means3D,
    const glm::vec3* __restrict__ scales,
    const glm::vec4* __restrict__ rotations,
    const float* __restrict__ viewmatrix,
    const float* __restrict__ projmatrix,
    const glm::vec3* __restrict__ cam_pos)
{
    // (Not shown in this excerpt: tile/pixel setup, ray_origin,
    //  projmatrix_inv, shared-memory buffers, and the header of the outer
    //  batch loop closed by the second brace after the inner loop.)

    // Build the ray through this pixel by unprojecting a point in NDC.
    float3 p_proj_r = { Pix2ndc(pixf.x, W), Pix2ndc(pixf.y, H), 1 };

    float p_hom_x_r = p_proj_r.x * (1.0000001);
    float p_hom_y_r = p_proj_r.y * (1.0000001);
    float p_hom_z_r = (100 - 100 * 0.01) / (100 - 0.01);
    float p_hom_w_r = 1;

    float3 p_hom_r = { p_hom_x_r, p_hom_y_r, p_hom_z_r };
    float4 p_orig_r = transformPoint4x4(p_hom_r, projmatrix_inv);

    glm::vec3 ray_direction = {
        p_orig_r.x - ray_origin.x,
        p_orig_r.y - ray_origin.y,
        p_orig_r.z - ray_origin.z,
    };
    glm::vec3 normalized_ray_direction = glm::normalize(ray_direction);

        for (int j = 0; !done && j < min(BLOCK_SIZE, toDo); j++)
        {
            // Evaluate the 2D Gaussian at this pixel.
            float2 xy = collected_xy[j];
            float2 d = { xy.x - pixf.x, xy.y - pixf.y };
            float4 con_o = collected_conic_opacity[j];
            float power = -0.5f * (con_o.x * d.x * d.x + con_o.z * d.y * d.y) - con_o.y * d.x * d.y;
            if (power > 0.0f)
                continue;

            float alpha = min(0.99f, con_o.w * exp(power));
            if (alpha < 1.0f / 255.0f)
                continue;
            float test_T = T * (1 - alpha);
            if (test_T < 0.0001f)
            {
                done = true;
                continue;
            }

            for (int ch = 0; ch < CHANNELS; ch++)
                C[ch] += features[collected_id[j] * CHANNELS + ch] * alpha * T;

            // Quaternion of the current Gaussian.
            glm::vec4 q = rotations[collected_id[j]];
            float rot_r = q.x;
            float rot_x = q.y;
            float rot_y = q.z;
            float rot_z = q.w;

            // (Not shown in this excerpt: the rotation matrix R, presumably
            //  built from the quaternion components above.)

            // Move the ray into the Gaussian's local frame.
            glm::vec3 temp = {
                ray_origin.x - means3D[3 * collected_id[j] + 0],
                ray_origin.y - means3D[3 * collected_id[j] + 1],
                ray_origin.z - means3D[3 * collected_id[j] + 2],
            };
            glm::vec3 rotated_ray_origin = R * temp;
            glm::vec3 rotated_ray_direction = R * normalized_ray_direction;

            // Quadratic a t^2 + b t + c = 0 for the ellipsoid with semi-axes 3 * scale.
            glm::vec3 a_t = rotated_ray_direction / (scales[collected_id[j]] * 3.0f) * rotated_ray_direction / (scales[collected_id[j]] * 3.0f);
            float a = a_t.x + a_t.y + a_t.z;

            glm::vec3 b_t = rotated_ray_direction / (scales[collected_id[j]] * 3.0f) * rotated_ray_origin / (scales[collected_id[j]] * 3.0f);
            float b = 2 * (b_t.x + b_t.y + b_t.z);

            glm::vec3 c_t = rotated_ray_origin / (scales[collected_id[j]] * 3.0f) * rotated_ray_origin / (scales[collected_id[j]] * 3.0f);
            float c = c_t.x + c_t.y + c_t.z - 1;

            float discriminant = b * b - 4 * a * c;

            // -b / (2a) is the midpoint of the two roots.
            float depth = (-b / 2 / a) / glm::length(ray_direction);

            if (depth < 0)
                continue;

            // Keep the depth point of the Gaussian with the largest blending weight.
            if (weight_max < alpha * T)
            {
                weight_max = alpha * T;
                depth_max = depth;
                discriminant_max = discriminant;
                idx_max = collected_id[j];
                point_rec = ray_origin + (-b / 2 / a) * normalized_ray_direction;
            }

            T = test_T;
            last_contributor = contributor;
        }
    }

    if (inside)
    {
        final_T[pix_id] = T;
        n_contrib[pix_id] = last_contributor;
        for (int ch = 0; ch < CHANNELS; ch++)
            out_color[ch * H * W + pix_id] = C[ch] + T * bg_color[ch];
        for (int ch = 0; ch < 3; ch++)
            out_pts[ch * H * W + pix_id] = point_rec[ch];

        out_depth[pix_id] = depth_max;
        accum_alpha[pix_id] = T;  // final transmittance of this pixel
        discriminants[pix_id] = discriminant_max;
        gidx[pix_id] = idx_max;
    }
}
```
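The ray-ellipsoid step in the kernel reduces to a quadratic in the ray parameter t, and the depth point sits at the midpoint of its two roots, t = -b / (2a). The sketch below reproduces that arithmetic in plain Python for an axis-aligned ellipsoid. Leaving out the rotation is a simplification of mine, and the function name and argument layout are hypothetical.

```python
import math


def ray_ellipsoid_midpoint(ray_origin, ray_dir, mean, scales, extent=3.0):
    """Midpoint of a ray's intersection with an axis-aligned ellipsoid.

    The ellipsoid has semi-axes extent * scales around `mean`. Rotation is
    omitted (identity); the kernel first rotates the ray into the Gaussian's
    local frame. Returns (t_mid, discriminant, point).
    """
    norm = math.sqrt(sum(x * x for x in ray_dir))
    d = [x / norm for x in ray_dir]                      # unit ray direction
    o = [ro - m for ro, m in zip(ray_origin, mean)]      # origin relative to the mean
    radii = [extent * s for s in scales]
    a = sum((di / r) ** 2 for di, r in zip(d, radii))
    b = 2.0 * sum((di / r) * (oi / r) for di, oi, r in zip(d, o, radii))
    c = sum((oi / r) ** 2 for oi, r in zip(o, radii)) - 1.0
    discriminant = b * b - 4.0 * a * c
    t_mid = -b / (2.0 * a)                               # midpoint of the two roots
    point = [ro + t_mid * di for ro, di in zip(ray_origin, d)]
    return t_mid, discriminant, point


t_mid, discriminant, point = ray_ellipsoid_midpoint(
    ray_origin=(0.0, 0.0, 0.0), ray_dir=(0.0, 0.0, 2.0),
    mean=(0.0, 0.0, 5.0), scales=(0.5, 0.5, 0.5))
```

For a ray aimed straight at the center, the midpoint lands on the Gaussian's mean, 5 units along the ray. A negative discriminant means the ray misses the ellipsoid; the kernel excerpt records the discriminant per pixel rather than filtering on it.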

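A fraction of the depth points is then sampled. Each pixel is drawn with probability proportional to its accumulated opacity (1 - accum_alpha), and the number of samples is controlled by a user-defined factor.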

```python
def depth_reinit(self, scene, render_depth, iteration, num_depth, args, pipe, background):
    out_pts_list = []
    gt_list = []
    views = scene.getTrainCameras_warn_up(iteration, args.warn_until_iter, scale=1.0, scale2=2.0).copy()
    for view in views:
        gt = view.original_image[0:3, :, :]

        render_depth_pkg = render_depth(view, self, pipe, background, culling=self._culling[:, view.uid])

        out_pts = render_depth_pkg["out_pts"]
        accum_alpha = render_depth_pkg["accum_alpha"]

        # Sampling probability per pixel, proportional to accumulated opacity.
        prob = 1 - accum_alpha
        prob = prob / prob.sum()
        prob = prob.reshape(-1).cpu().numpy()

        # Fraction of pixels to sample so that all views together give num_depth points.
        factor = 1 / (gt.shape[1] * gt.shape[2] * len(views) / num_depth)

        N_xyz = prob.shape[0]
        num_sampled = int(N_xyz * factor)

        indices = np.random.choice(N_xyz, size=num_sampled, p=prob, replace=False)

        # Debug output, disabled in the original:
        # print(f"Normalized prob: {prob}")
        # print(f"Reshaped and moved to numpy: {prob}")
        # print(f"Factor: {factor}")
        # print(f"N_xyz (Total samples): {N_xyz}")
        # print(f"Number of sampled points: {num_sampled}")
        # print(f"Sampled indices: {indices}")

        out_pts = out_pts.permute(1, 2, 0).reshape(-1, 3)
        gt = gt.permute(1, 2, 0).reshape(-1, 3)

        out_pts_list.append(out_pts[indices])
        gt_list.append(gt[indices])

    out_pts_merged = torch.cat(out_pts_list)
    gt_merged = torch.cat(gt_list)

    return out_pts_merged, gt_merged
```

In the end only the depth points and their colors are kept, and they are then merged.

```python
def reinitial_pts(self, pts, rgb):
    fused_point_cloud = pts
    fused_color = RGB2SH(rgb)
    # Only the DC term of the spherical harmonics is initialised from color.
    features = torch.zeros((fused_color.shape[0], 3, (self.max_sh_degree + 1) ** 2)).float().cuda()
    features[:, :3, 0] = fused_color
    features[:, 3:, 1:] = 0.0

    # Isotropic initial scale from the distance to neighbouring points.
    dist2 = torch.clamp_min(distCUDA2(fused_point_cloud), 0.0000001)
    scales = torch.log(torch.sqrt(dist2))[..., None].repeat(1, 3)
    # Identity rotation.
    rots = torch.zeros((fused_point_cloud.shape[0], 4), device="cuda")
    rots[:, 0] = 1

    # Initial opacity of 0.1 for every new Gaussian.
    opacities = inverse_sigmoid(0.1 * torch.ones((fused_point_cloud.shape[0], 1), dtype=torch.float, device="cuda"))

    self._xyz = nn.Parameter(fused_point_cloud.contiguous().requires_grad_(True))
    self._features_dc = nn.Parameter(features[:, :, 0:1].transpose(1, 2).contiguous().requires_grad_(True))
    self._features_rest = nn.Parameter(features[:, :, 1:].transpose(1, 2).contiguous().requires_grad_(True))
    self._scaling = nn.Parameter(scales.requires_grad_(True))
    self._rotation = nn.Parameter(rots.requires_grad_(True))
    self._opacity = nn.Parameter(opacities.requires_grad_(True))
    self.max_radii2D = torch.zeros((self.get_xyz.shape[0]), device="cuda")
```

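As shown, by controlling the sampling ratio the authors keep the point count roughly the same before and after depth_reinit, while the points themselves are rebuilt from the depth map.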
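The arithmetic behind that ratio is short: each view samples about num_depth / len(views) pixels, so all views together yield about num_depth points, and num_depth is the current Gaussian count times args.num_depth_factor. A small sketch with made-up image size, view count, and target (the helper name is hypothetical):

```python
def depth_samples_per_view(height, width, num_views, num_depth):
    """Pixels sampled in each view, following the factor used in depth_reinit."""
    factor = 1 / (height * width * num_views / num_depth)
    n_pixels = height * width
    return int(n_pixels * factor)


num_views = 100
num_depth = 500_000          # hypothetical target, e.g. current count * factor
per_view = depth_samples_per_view(600, 800, num_views, num_depth)
total = per_view * num_views
```

Because of the `int()` truncation the total can fall short of `num_depth` by up to one point per view, which is negligible at this scale.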

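aggressive Gaussian densification

According to the paper, keeping a Gaussian's opacity unchanged when cloning implicitly amplifies the influence of the densified Gaussians and thereby disrupts the optimization.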

```python
def clone(self, selected_pts_mask):
    # Opacity of each clone: two clones composite back to the old opacity.
    temp_opacity_old = self.get_opacity[selected_pts_mask]
    new_opacity = 1 - (1 - temp_opacity_old) ** 0.5

    # Scale of each clone, the square root of the covariance factor.
    temp_scale_old = self.get_scaling[selected_pts_mask]
    new_scaling = (temp_opacity_old / (2 * new_opacity - 0.5 ** 0.5 * new_opacity ** 2)) * temp_scale_old

    # Keep the opacity inside a numerically safe range.
    new_opacity = torch.clamp(new_opacity, max=1.0 - torch.finfo(torch.float32).eps, min=0.0051)
```

Mini-Splatting simplification

simplification 1

Gaussians are pruned by their accumulated opacity contribution and then importance-sampled, with the sampling ratio set to 0.6.

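```python
def interesction_sampling(self, scene, render_simp, iteration, args, pipe, background):
    # imp_score and accum_area_max are used here without being defined in
    # this excerpt.
    imp_score[accum_area_max == 0] = 0
    # Normalise importance into a sampling distribution.
    prob = imp_score / imp_score.sum()
    prob = prob.cpu().numpy()

    factor = args.sampling_factor
    N_xyz = self._xyz.shape[0]
    # Scale the sample count by the share of Gaussians with non-zero importance.
    num_sampled = int(N_xyz * factor * ((prob != 0).sum() / prob.shape[0]))
    indices = np.random.choice(N_xyz, size=num_sampled, p=prob, replace=False)
```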
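The sampling step can be tried out without NumPy. The sketch below keeps the same sample-count formula but swaps `np.random.choice` for Efraimidis-Spirakis keys, a standard way to draw a weighted sample without replacement. The function name, the seed argument, and the toy scores are assumptions for illustration.

```python
import random


def sample_by_importance(imp_score, sampling_factor=0.6, seed=0):
    """Draw indices without replacement, weighted by importance."""
    n = len(imp_score)
    if n == 0:
        return []
    nonzero = [i for i, s in enumerate(imp_score) if s > 0]
    # Same count as in the excerpt: N * factor * share of non-zero scores.
    num_sampled = int(n * sampling_factor * (len(nonzero) / n))
    rng = random.Random(seed)
    # Efraimidis-Spirakis: key = u ** (1 / weight), keep the largest keys.
    keyed = [(rng.random() ** (1.0 / imp_score[i]), i) for i in nonzero]
    keyed.sort(reverse=True)
    return sorted(i for _, i in keyed[:num_sampled])


scores = [0.0, 0.5, 0.0, 0.2, 0.3, 1.0, 0.0, 0.4, 0.6, 0.1]
kept = sample_by_importance(scores, sampling_factor=0.6)
```

With 10 Gaussians, 7 of them non-zero, and a factor of 0.6, four indices are kept, and zero-importance Gaussians can never be selected.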

simplification 2

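Apply the same importance-based pruning that runs before depth_reinit.

Differences between Mini-Splatting2 and Mini-Splatting2-D

During Mini-Splatting simplification, pruning ignores the outdoor/indoor distinction and always uses the Gaussians' accumulated opacity.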

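Summary

This is a well-written paper, and the first one I have read closely on my own. Its improvements come mainly from analyzing the optimization process. I see two novel points: (1) it uses an approach similar to screen-space post-processing, adding the render_depth and render_simp functions to analyze each view and reconstruct depth and accumulated opacity; (2) it introduces the notion of importance, which makes full use of each Gaussian's accumulated opacity and also enables effective visibility Gaussian culling.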

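Limitations

(1) In indoor scenes, aggressive densification keeps many Gaussians through the importance criterion, so storage usage is high. The authors say this preserves the render quality of floors and walls indoors, which is indeed consistent with importance-based pruning.

(2) Simplification is implemented by modifying the CUDA rasterizer, which inevitably uses a lot of memory.

(3) Densification is driven by importance, which inevitably over-densifies some scenes.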

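Personal thoughts

Mini-Splatting did not provide a general densification scheme. This work does, but it runs into problems with storage.

As Mini-Splatting simplification does, could running simplification at certain specific stages further improve the trade-off among Gaussian count, render quality, and optimization speed?

This work continues Mini-Splatting's simplification based on Gaussian weight masks. Visibility culling is a natural idea, but this is 3D reconstruction after all, and culling with fixed per-view masks is bound to cause memory overhead.

Try different simplification methods, or lower the culling frequency; in short, look for a simplification that fits Gaussians better?

The early-iteration optimization the authors describe does address the under-reconstruction of Gaussians early in 3DGS training.

Depth reinitialization and aggressive densification on their own seem somewhat abrupt?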